Migrating Oracle to PostgreSQL

Peter Goldthorp, Dito June 2022

Oracle licenses are expensive. Significant savings can be made by migrating from Oracle to PostgreSQL. Google offers a number of options to migrate Oracle databases running on-prem or in BMS to PostgreSQL. This document describes the process to migrate a development database from Oracle to PostgreSQL.

Setup

- Use Dito’s Bare Metal Solution inaBOX terraform code to create an IAP protected, container optimized GCP VM with Visulate Ora2pg installed.

- Install Visulate for Oracle from Google Cloud Marketplace

- Create a target environment in CloudSQL, AlloyDB or a VM with PostgreSQL installed.

Assess

The first step in a migration from Oracle to Postgres is to evaluate the systems that depend on Oracle. Identify each application in the target environment and document its components. An application comprises all the software components that interact to provide a service. Examples could include a Windows based client making client server connections to a PL/SQL api, a 3-tier application that uses Apache modules to connect to a database and run SQL statements defined in the mid-tier or web services based on WSDL and SOAP.

For each application, document the technology used, system resource requirements, dependencies and business priority. Differentiate between custom developed and commercial off-the-shelf systems (COTS). For COTS based systems see if the vendor supports a choice of database. Locate the source code for custom developed systems and verify there are no contractual limitations that prevent modification (e.g. if developed by outside contractors who retain copyright). Estimate the quantity and complexity of SQL in database modules.

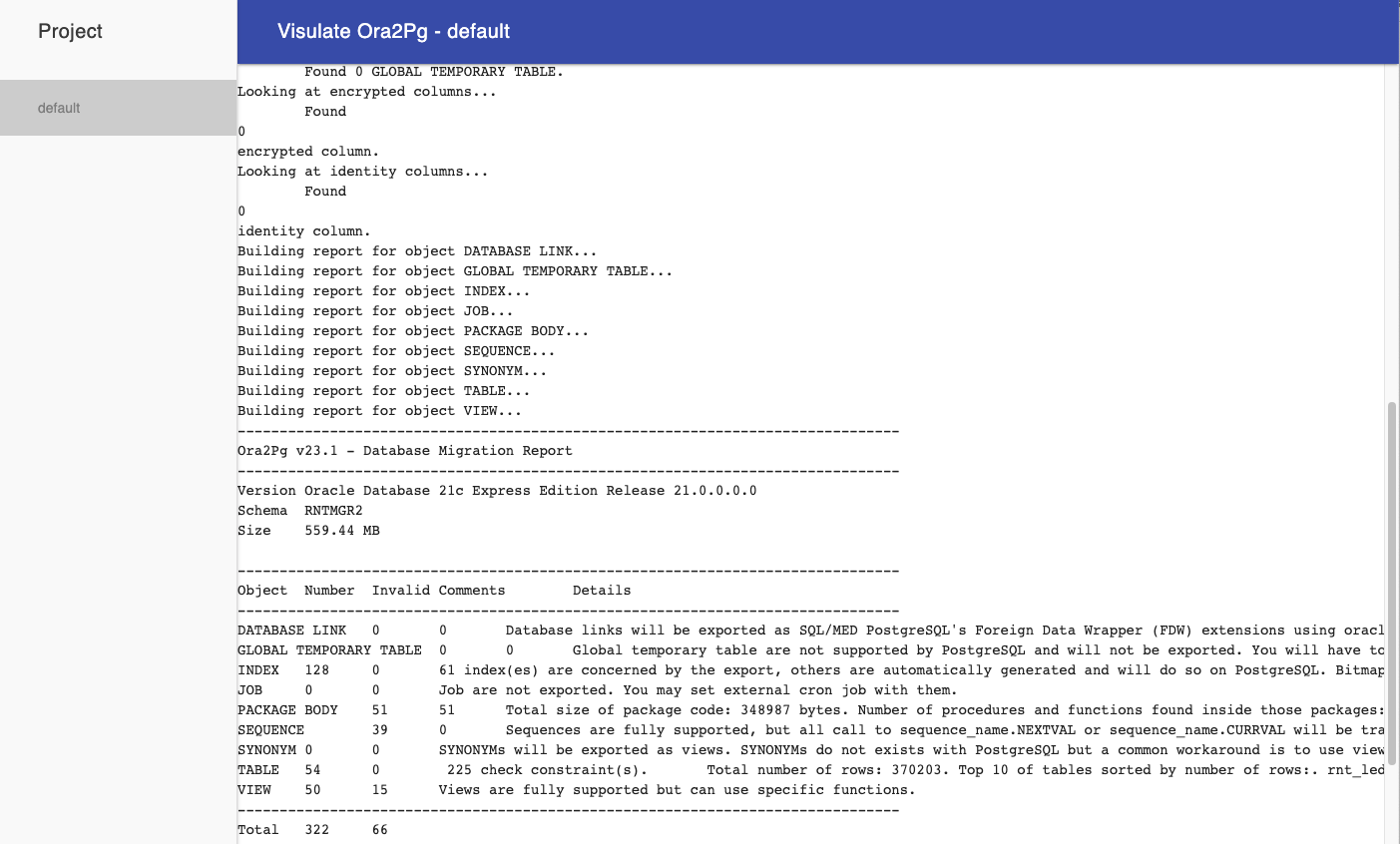

Identify the databases and database schemas associated with each application system. Run the Ora2Pg Database Migration Report on the the schemas to estimate their size and migration complexity

Sample output:

| Object | Number | Invalid | Details |

|---|---|---|---|

| DATABASE LINK | 0 | 0 | Database links will be exported as SQL/MED PostgreSQL’s Foreign Data Wrapper (FDW) extensions using oracle_fdw. |

| GLOBAL TEMPORARY TABLE | 0 | 0 | Global temporary tables are not supported by PostgreSQL and will not be exported. You will have to rewrite some application code to match the PostgreSQL temporary table behavior. |

| INDEX | 128 | 0 | Bitmap will be exported as btree_gin index(es). Domain index are exported as b-tree but commented to be edited to mainly use FTS. Cluster, bitmap join and IOT indexes will not be exported at all. Reverse indexes are not exported too, you may use a trigram-based index (see pg_trgm) or a reverse() function based index and search. Use ‘varchar_pattern_ops’, ‘text_pattern_ops’ or ‘bpchar_pattern_ops’ operators in your indexes to improve search with the LIKE operator respectively into varchar, text or char columns. |

| JOB | 0 | 0 | Job are not exported. You may set external cron job with them. |

| PACKAGE BODY | 51 | 1 | Total size of package code: 348987 bytes. Number of procedures and functions found inside those packages: 354. |

| SEQUENCE | 39 | 0 | Sequences are fully supported, but all call to sequence_name.NEXTVAL or sequence_name.CURRVAL will be transformed into NEXTVAL(‘sequence_name’) or CURRVAL(‘sequence_name’). |

| SYNONYM | 30 | 0 | SYNONYMs will be exported as views. SYNONYMs do not exists with PostgreSQL but a common workaround is to use views or set the PostgreSQL search_path in your session to access object outside the current schema. |

| TABLE | 54 | 0 | 225 check constraint(s). Total number of rows: 370203. Top 10 of tables sorted by number of rows:. rnt_ledger_entries has 90284 rows. rnt_payment_allocations has 54703 rows. rnt_city_zipcodes has 41754 rows. rnt_zipcodes has 41754 rows. rnt_cities has 33154 rows. rnt_accounts_payable has 26378 rows. rnt_pt_rules has 21000 rows. rnt_payments has 17289 rows. rnt_accounts_receivable has 9270 rows. rnt_accounts has 7953 rows. Top 10 of largest tables:. |

| VIEW | 50 | 3 | Views are fully supported but can use specific functions. |

| Total | 352 | 4 |

Document findings in a spreadsheet or Google Doc that identifies the following:

- Application name

- Short description

- Stakeholders (business and technical)

- Business priority/criticality (High, Medium or Low)

- System requirements

- Storage requirements

- Technology stack

- COTS or custom developed

- Source code location

- Database schema

- Acceptable downtime (e.g. hours, minutes or none at all)

- Migration complexity

- Comments

Plan

Select an application to migrate. Start with one with one where the migration complexity is medium or low, the business criticality is moderate and the database size is less than 100GB. Perform a detailed analysis of the system. Develop a test plan and identify test resources.

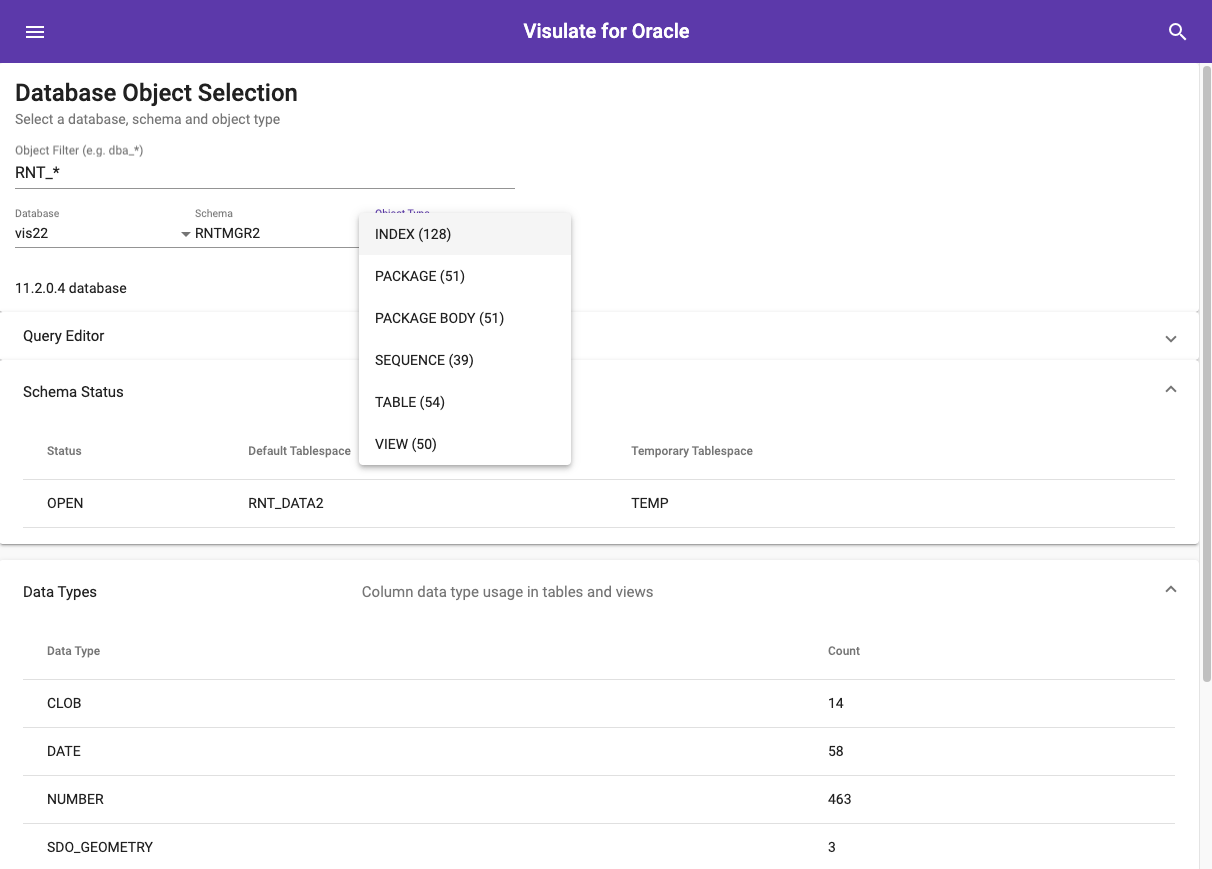

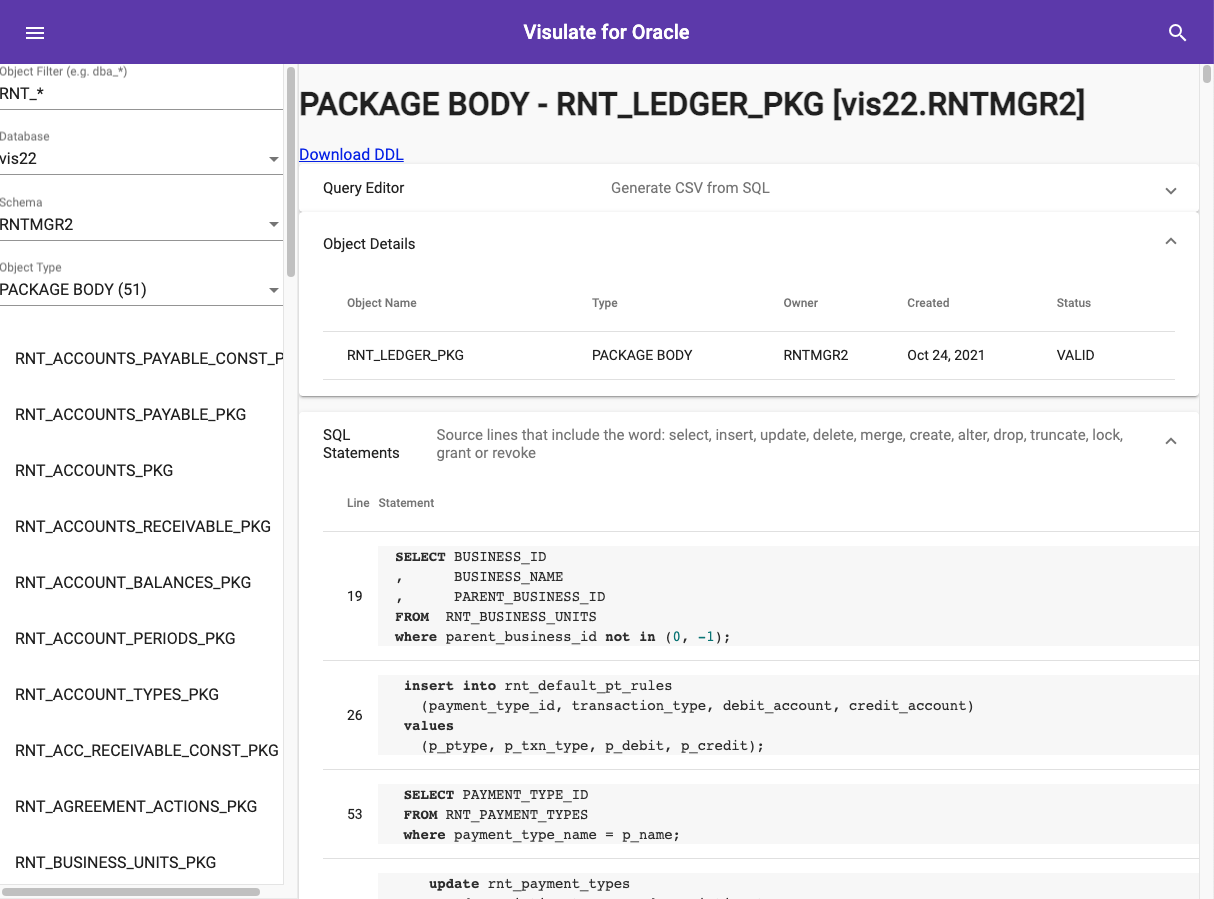

Use Visulate for Oracle to review the structure and content of the database schemas.

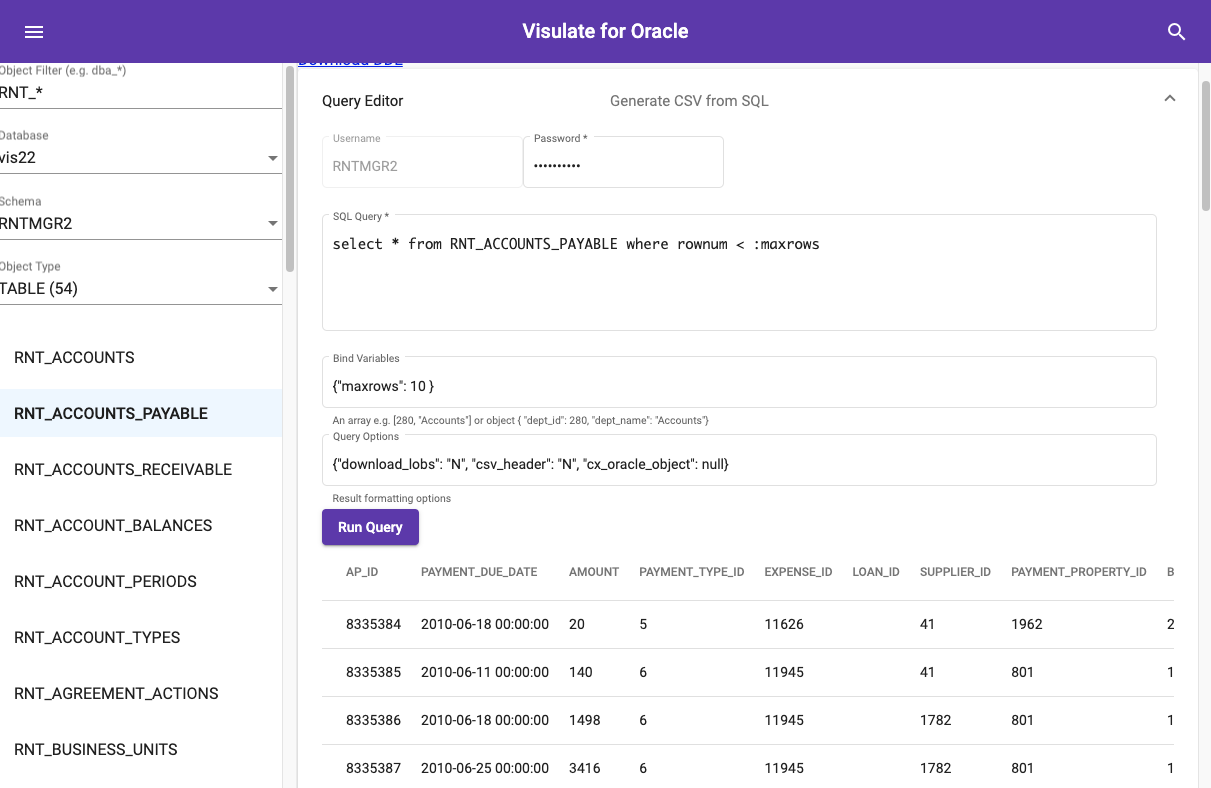

Use the query table feature to review data format and evaluate system performance. Benchmark expensive queries.

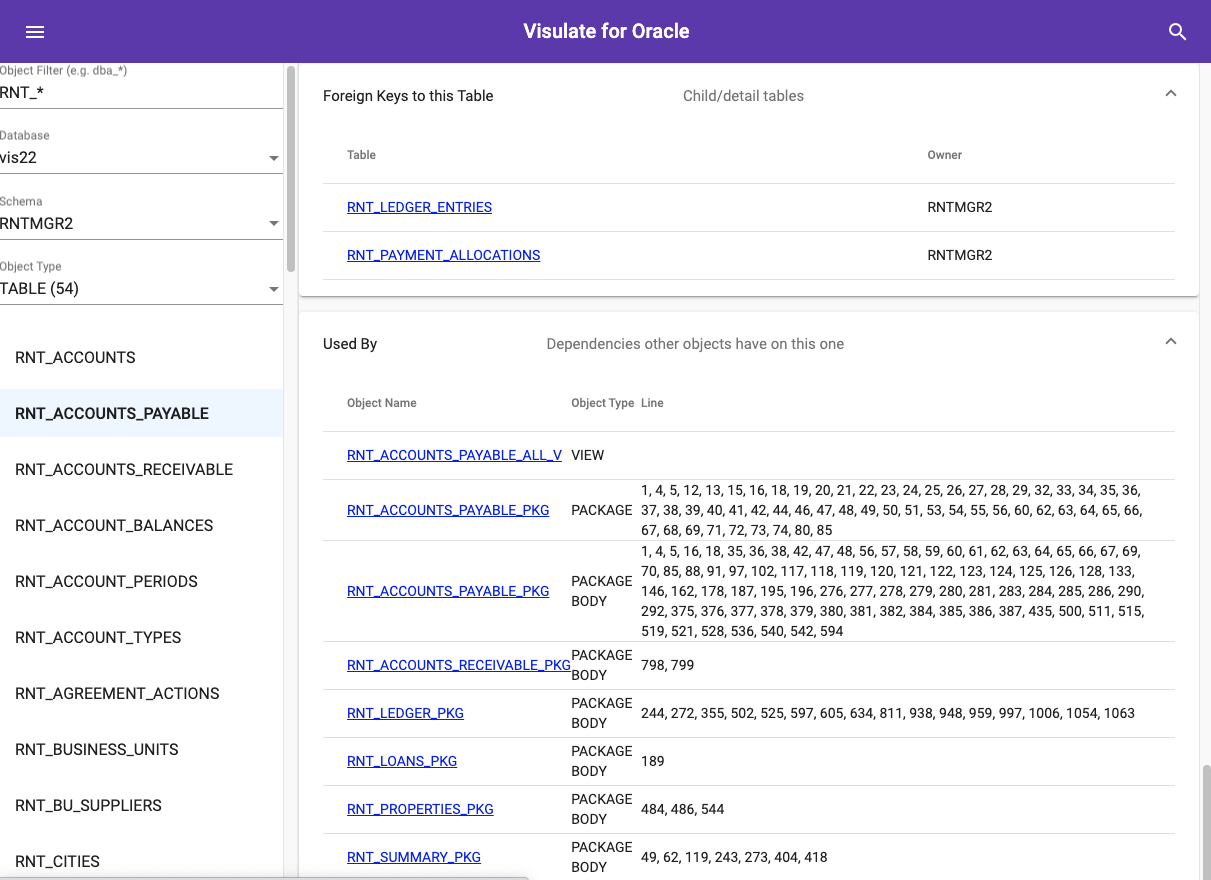

Identify dependencies between database objects.

Review the SQL and business logic encapsulated in PL/SQL programs. Does it appear to have been hand written or auto-generated? Look for stale data and redundant code. For example, historical records that are no longer used and invalid packages or procedures. Where possible these should be deleted before starting the migration.

Examine the source for application code that interacts with the database. Does the SQL operate on tables directly or is it abstracted using views and stored procedure calls? Look for Oracle specific syntax (outer joins expressed with (+), selects from DUAL, calls to SYSDATE etc). Identify a migration approach based on this analysis.

Most migrations can be categorized as a conversion or a rewrite. A conversion migration is one where the database is changed with minimal changes to the rest of the tech stack. A rewrite is one that makes application changes in addition to updating the database engine. Conversions work best when most of the business rules are implemented in application code and the database is mainly used as a relational store. Consider a rewrite for systems that have implemented a significant amount of business logic in PL/SQL. It may be preferable to externalize this logic in an API microservice instead of converting to PL/pgSQL.

Deploy

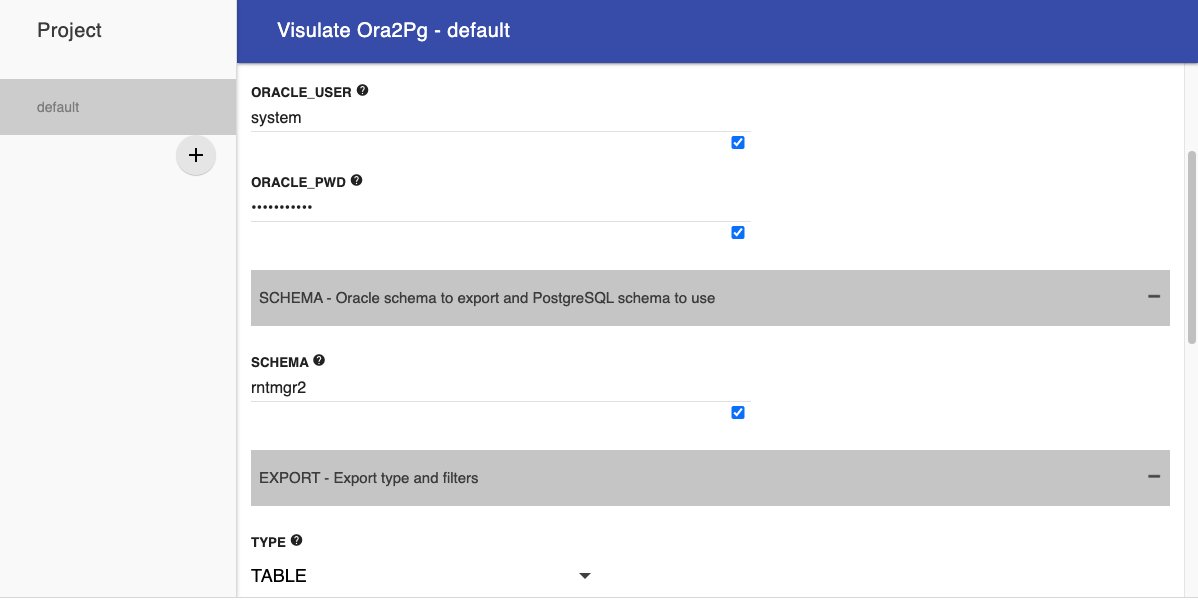

Use Visulate Ora2Pg to generate DDL for database objects. Ora2Pg is an open source application that supports Oracle to PostgreSQL migrations. Visulate Ora2Pg is a container bundled version of it that adds a single page web application UI to control configuration and execution. Enter SYSTEM credentials and connect details in the Input section. Select an object type (e.g. TABLE) in the TYPE field and hit the RUN button at the top of the page.

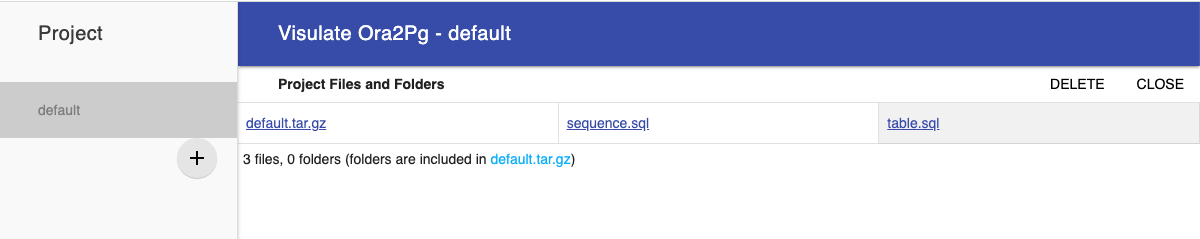

Hit the REVIEW button when generation completes to download the generated DDL

Review the generated DDL in a text editor and update the configuration settings if necessary. The default settings work for most implementations. Consider changing the DEFAULT_NUMERIC parameter from “bigint” to “numeric” for systems that require decimal precision. Note “bigint” is a better choice for sequence generated numeric keys so it may be simpler to edit the generated DDL. Test the DDL in a development database and then commit to source control.

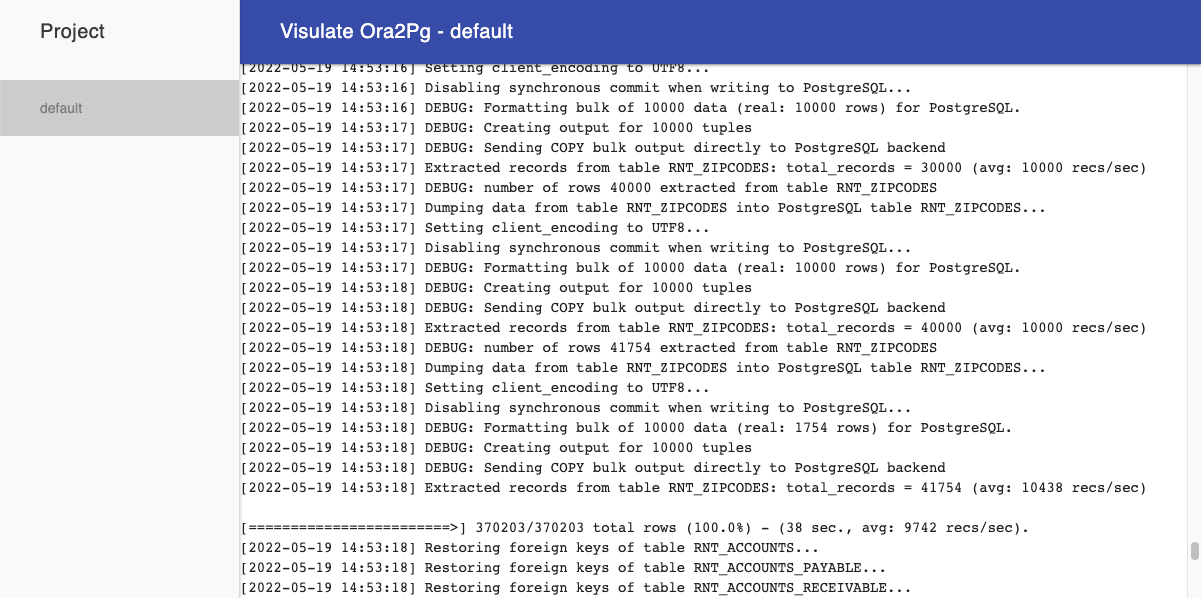

Enter the connect details for the development database in the output section of the UI, change the export type to COPY and hit RUN. This will read data from the Oracle schema and perform a bulk copy into Postgres.

Note: by default Ora2Pg will attempt to use the FDW extension for this - see Ora2PG now supports oracle_fdw to increase the data migration speed. Follow the oracle_fdw install instructions to enable this. Alternatively, use the Show advanced settings option of the UI to display and disable the FDW_SERVER and ORACLE_FDW_TRANSFORM parameters.

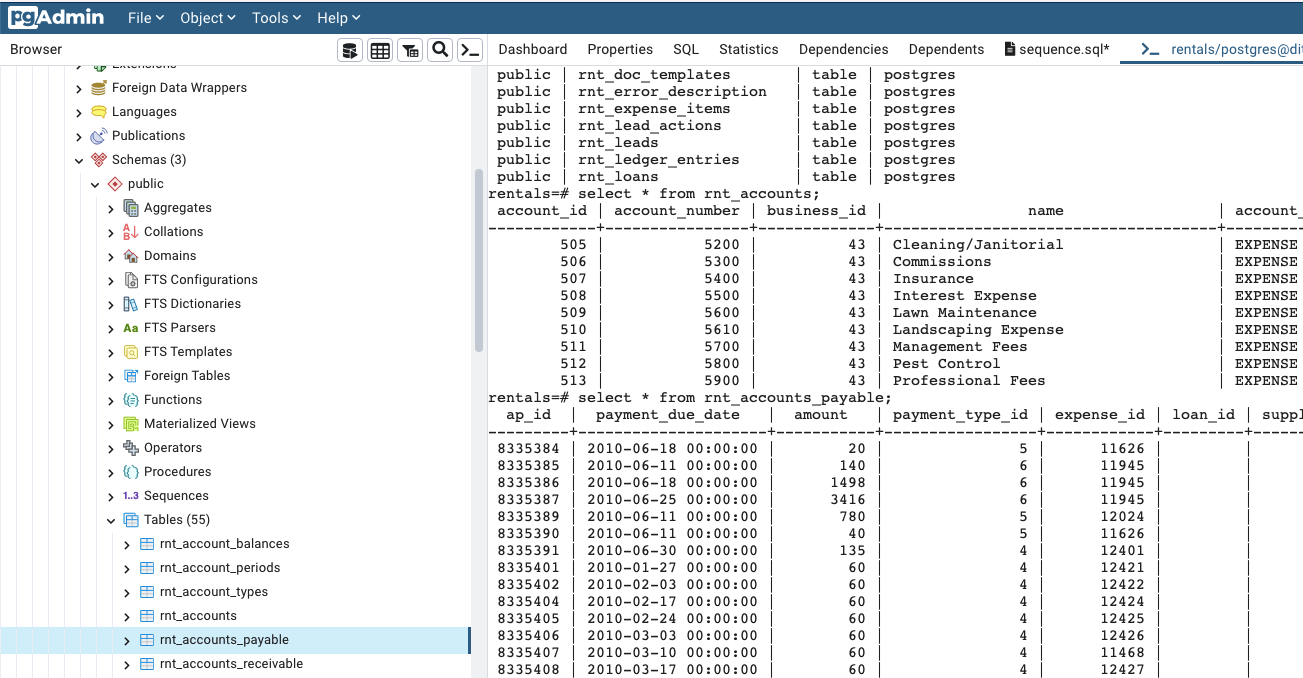

Connect to the development database when the COPY run completes and verify the result:

Optimize

At this point you should have a populated development database. The next step is to convert or rewrite the associated application code.

Convert Code

Create a branch or fork the application source code. Use Ora2Pg to generate PL/pgSQL functions based on functions, procedures, triggers and packages in the source database. Set the FILE_PER_FUNCTION parameter in the (advanced settings) OUTPUT section to generate a separate file for each. Download the result as a compressed tar file, uncompress and place its content under source control .

Review the generated code, modify as required then apply to the database. Follow the guidelines published by postgressql.org, Google and AWS. Use the same guidelines to convert SQL statements in application code.

Build the application and test its functionality and performance.

Rewrite Code

Older applications that follow client-server or 3-tier architecture patterns may be candidates for conversion to micro services. Follow the Software Engineering Institute Steps for Migrating Existing Applications to Microservices to analyze and redesign these systems.

Decide on a technology stack for API development

Express JS and Flask are good candidates for API development. Express supports JavaScript/Node JS development, Flask supports Python. Both frameworks can be used to create REST APIs that connect to a Postgres database.

The Express documentation recommends the pg-promise library for making Postgres connections as shown in the example below.

const pgp = require('pg-promise')(/* options */)

const db = pgp('postgres://username:password@host:port/database')

db.one('SELECT $1 AS value', 123)

.then((data) => {

console.log('DATA:', data.value)

})

.catch((error) => {

console.log('ERROR:', error)

})

Other alternatives include node-postgres and Sequelize. node-postgres is a lower level module. pg-promise is built on top of it. Sequelize supports object relational mapping (ORM). sequelize-auto can be used to reverse engineer ORM models from an existing schema.

Psycopg is the recommended library for connecting Flask applications to Postgres as shown in the example below.

import psycopg2

# Connect to your postgres DB

conn = psycopg2.connect("dbname=test user=postgres")

# Open a cursor to perform database operations

cur = conn.cursor()

# Execute a query

cur.execute("SELECT * FROM my_data")

# Retrieve query results

records = cur.fetchall()

SQL Alchemy provides an ORM alternative. Use its automap feature to reverse engineer ORM models

Production Migration

The production migration can occur once the application code changes are complete. Create a new database using the DDL that was checked into source control then identify a data migration approach. In some cases this may follow a similar process to the one that was used for development others may require an ETL pipeline.

Rerunning Ora2Pg in COPY mode is an option for systems that can tolerate a downtime and/or operate in read-only mode for the duration of the data migration. The Oracle database is restarted in read-only mode at the start of the migration. Ora2Pg is used to transfer the data. Once the transfer is complete data transformations can be applied in the Postgres database (e.g. to restructure for microservices).

The application can continue to support query access to the Oracle database while the data is being transferred and transformed. Control is switched to the new database and application code when the migration is complete.

Consider resizing the Ora2Pg VM to maximize CPU cores and memory before starting an Ora2Pg based migration. There are a number of Ora2Pg parameters that can be tweaked to maximize performance. Review the parameter values in the DATA, DATA_DIFF, PERFORMANCE and EXPORT sections of the Ora2Pg configuration. Experiment with different values and collect performance benchmarks on the data migration prior to the production run.

Systems that require zero downtime will need to set up an ETL pipeline for the migration. Google maintains an Oracle to Postgres toolkit to support this. It uses a Google Datastream Logminer plugin to extract data from the Oracle database and stage it in a cloud storage bucket. This triggers Pub/Sub notifications to a Dataflow process that loads the data into Postgres. See Migrate from Oracle to PostgreSQL with minimal downtime with Datastream for details.

There’s also the Oracle to PostgreSQL migration service currently in preview. It accepts an Ora2Pg configuration file as input which it uses to create a conversion workspace. Then the conversion workspace and connection profiles are used by jobs that perform an initial synchronization and change data capture.

Copyright © Dito LLC, 2023